Face Averaging

Who is the meanest person?

Face averaging is a novel concept that smashes a few newer technologies together with some much older ones. It all started for me about two years ago, when I discovered that a hospitality business had a public dataset of its employees' photos available online. That's a lot of information, and I wanted to turn it into something meaningful, which is how I arrived at the idea of smashing all 30,000 employees into one picture.

I'm sure we've all seen a face morph at some point; this is essentially face morphing on a much larger scale. Fast forward to last month: I'm on the train to work when something interesting comes to mind. "I wonder what the average Sydneysider looks like?" A plan quickly came together. Because the city is so dense, I walk past a lot of faces on any given day. If I slipped my phone into my breast pocket, I could easily film all these faces without looking too out of place.

You could argue that covertly filming people is a privacy concern, but it's perfectly legal here if it's for personal use and it's in public. Tick and tick. Very quickly I had hundreds of gigs of video of people around Sydney. Now to put it to use.

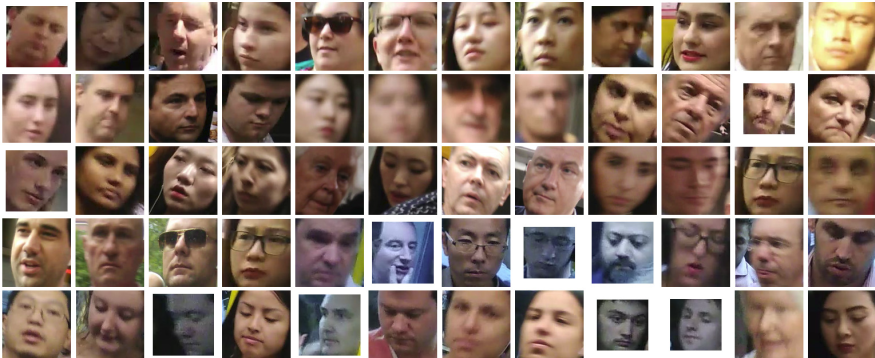

I needed to pull the faces out of the video, which meant breaking the video into frames. Each second of video nets me 60 photos, and that adds up quickly. To save time and space, I only take every 15th frame, or four per second. Those frames go into a neural network trained purely to detect faces in a photo. It did its job a little too well and picked up faces I couldn't even see, so I limited extraction to faces above a minimum size.
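The sampling arithmetic and the size filter are easy to sketch on their own. This is only an illustration, not the actual script: the function names, the `(x, y, width, height)` box format and the 80-pixel cutoff are all assumptions of mine, and the detector itself is left out.

```python
def sampled_frame_indices(total_frames, step=15):
    """Indices of the frames to keep: every `step`-th frame, starting at 0."""
    return list(range(0, total_frames, step))


def large_enough(box, min_size=80):
    """Keep a detection only if its box is at least min_size pixels per side.

    `box` is (x, y, width, height). min_size=80 is an illustrative value,
    not the one used in the original project.
    """
    _, _, width, height = box
    return width >= min_size and height >= min_size


# One second of 60 fps video yields four sampled frames.
one_second = sampled_frame_indices(60)

# Hypothetical detector output for one frame: a close face and a distant one.
detections = [(100, 120, 160, 160), (900, 40, 22, 24)]
kept = [box for box in detections if large_enough(box)]
```

Filtering on the box size after detection keeps the detector untouched; only the tiny background faces get dropped.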

This sounded nice and easy until I realised that a person who appears in more than one frame gets saved several times, and that's going to skew the average. So not only do I have to detect faces, I have to detect whether I've seen each one before. To remedy this, when I grab a face I calculate its fingerprint (a hash that stays the same even if the face isn't in the same position or rotation) and compare it with all the other fingerprints to see if we've seen it before. Problem solved.
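Here's a rough sketch of that duplicate check. I'm assuming the fingerprint is a short vector of numbers and that two fingerprints of the same person come out close rather than bit-for-bit identical, so the comparison is a distance against a threshold. The three-number fingerprints, the function names and the 0.6 threshold are all made up for illustration.

```python
import math


def is_same_face(a, b, threshold=0.6):
    """Treat two fingerprints as the same person if they are close enough."""
    return math.dist(a, b) < threshold


def deduplicate(fingerprints, threshold=0.6):
    """Keep the first fingerprint of each person, dropping later repeats."""
    unique = []
    for fingerprint in fingerprints:
        if not any(is_same_face(fingerprint, seen, threshold) for seen in unique):
            unique.append(fingerprint)
    return unique


# Hypothetical fingerprints (real ones would have many more dimensions).
faces = [
    (0.10, 0.20, 0.30),
    (0.12, 0.21, 0.29),  # the same person a few frames later
    (0.90, 0.80, 0.10),  # somebody else
]
unique_faces = deduplicate(faces)
```

Comparing every new face against every face seen so far gets slow as the collection grows, but for a sketch it keeps the idea obvious.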

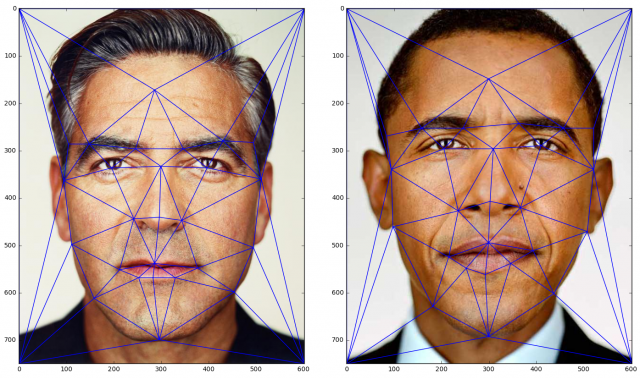

Remember when I mentioned this used some older technologies? That's where Delaunay triangulation comes into play. Briefly, it connects a set of points into triangles in such a way that no point falls inside the circumcircle of any triangle. We use this with another neural network that maps out 68 points on a human face. We're talking points like "left eye corner" and "tip of nose". Once we've fed all our images into this network, we have a bunch of face photos, each with a matching text file giving the positions of its 68 points in the image.
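To make the Delaunay condition concrete, here's a minimal sketch of the test behind it: a triangle only qualifies if no other point sits inside the circle passing through its three corners. The function names are mine, and this only checks a single triangle. A real pipeline would more likely hand the whole job to a library (`scipy.spatial.Delaunay` is one option).

```python
def in_circumcircle(a, b, c, p):
    """True if p lies strictly inside the circle through a, b and c.

    a, b, c must be given in counter-clockwise order. This is the
    standard determinant form of the in-circle test.
    """
    ax, ay = a[0] - p[0], a[1] - p[1]
    bx, by = b[0] - p[0], b[1] - p[1]
    cx, cy = c[0] - p[0], c[1] - p[1]
    det = (
        (ax * ax + ay * ay) * (bx * cy - cx * by)
        - (bx * bx + by * by) * (ax * cy - cx * ay)
        + (cx * cx + cy * cy) * (ax * by - bx * ay)
    )
    return det > 0


def is_delaunay_triangle(triangle, other_points):
    """A triangle is valid if no other point falls inside its circumcircle."""
    a, b, c = triangle
    return not any(in_circumcircle(a, b, c, p) for p in other_points)


triangle = ((0, 0), (1, 0), (0, 1))  # counter-clockwise
far_point_ok = is_delaunay_triangle(triangle, [(2, 2)])
near_point_ok = is_delaunay_triangle(triangle, [(0.6, 0.6)])
```

Here `(2, 2)` sits outside the circle, so the triangle stands, while `(0.6, 0.6)` sits inside it, so a proper triangulation would pick different triangles.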

From there, it's essentially just "squish and stretch each photo around these points until all the faces are exactly aligned". With all the faces aligned, we can divide up the opacity so that each photo contributes an equal share (1/N for N photos), which gives us our final blended image of a single, average face.
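The blending step boils down to a pixel-by-pixel mean. Below is a small sketch that assumes the photos are already warped into alignment and, to keep it short, treats each one as a greyscale grid of numbers (a list of rows). The function name and the tiny 2x2 "photos" are purely illustrative.

```python
def average_images(images):
    """Pixel-wise mean of equally sized greyscale images.

    Each image is a list of rows of brightness values. Every image
    contributes 1/N of the result, which is the equal-opacity blend.
    """
    n = len(images)
    height, width = len(images[0]), len(images[0][0])
    result = [[0.0] * width for _ in range(height)]
    for image in images:
        for y in range(height):
            for x in range(width):
                result[y][x] += image[y][x] / n
    return result


# Three tiny aligned "photos", 2x2 pixels each.
photos = [
    [[0, 30], [60, 90]],
    [[90, 30], [60, 0]],
    [[180, 30], [60, 90]],
]
blended = average_images(photos)
```

Pixels where every photo agrees stay as they are, and pixels where they differ settle on the middle ground, which is why a big average ends up looking so smooth.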

It was only at that point I realised, "I didn't have to go through all this trouble. Google Images exists." So I went back and edited the script so that I can feed it a page of Google Images results as the faces to average. This let me quickly make the facial average of "Australian male" or "New York City female" or anything else.

If the nitty-gritty script and implementation tickles your fancy, or you just want to give her a spin yourself, I implore you to give it a geese! Otherwise, enjoy this photo of all US presidents.